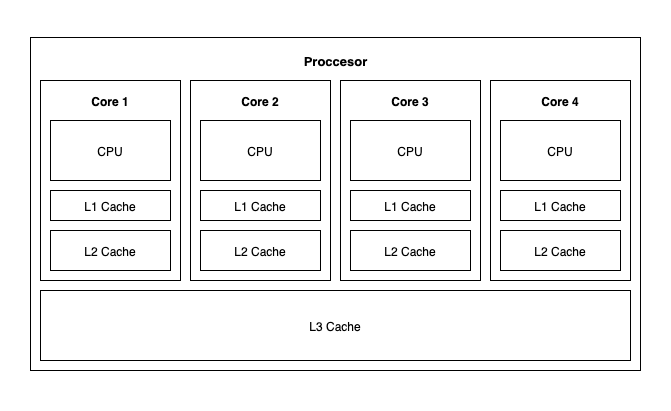

In order for a CPU to see all the different memories as a single fast and large memory component (see memory hierarchy) and to boost the performance by not going to the main memory to fetch the data, modern processors rely heavily on caching. Processors have multilevel caches after which, in case of a cache miss, load data from the main memory.

In a multicore CPU, where each core has its own private cache, the problem arises when executing a multithreaded program in parallel on all those cores and those threads have to communicate through shared memory. Let’s say we have thread T1 on CPU core C1 loads value of 5 from memory location 0xA and stores it in its cache for future use. Then thread T2 on core C2 loads data from the same location, updates the value to 10 and, depending on the cache write policy, writes the updated value back to main memory. When T1 again loads data from that location, but this time from its local cache, it gets the stale value of 5 instead of 10. This is the state of incoherent memory. In order to have a correct multithreaded program, every thread has to see the newly updated value of a shared variable.

The solution to this problem, the way in which all threads in a multithreaded program see the newest value of a shared variable, is cache coherence protocols that control the caches to avoid stale cache lines. The basic idea is to maintain a coherent view of data values in all caches and when some data is updated that the update is reflected in all caches.

In the context of memory consistency models, which deal and define loads/stores ordering of all memory address locations, cache coherence deals only with a single address location.

Cache coherence can be handled in software and in hardware, and generally, it is a combination of two. Different hardware platforms provide different degrees of cache coherence (meaning different levels of data synchronization between CPU caches) then it is up to the software to secure additional coherence if and when it needs to. Hardware’s ISA provides special instructions that the software (generally our compilers) can insert in certain places in order to achieve greater memory coherence (this is done by preventing loads and store reordering or making a variable non-cacheable so it is written and read directly from main memory). In this post, I’ll briefly discuss some basics of hardware coherence protocols. One thing to mention is that examples I’ll provide will be in relation to different cores inside a single CPU, but everything applies to multiprocessor systems.

Cache coherence requirements

In order to have a cache coherent system, two requirements have to be fulfilled:

Write propagation – every write, or every change, of some memory location that is cached in one cache must be propagated to all other caches that hold a copy of that same location. All CPU cores have to see the updated value. For example, if T1 on CPU core C1 updates some variable, that write has to be propagated to all other caches so they can update or invalidate their copy. Updating or invalidating will be discussed in a minute.

Write serialization – all writes and reads to a certain memory location must happen in a single global order that is seen by all CPU cores. The reason for this is that if, for example, T1 on CPU core C1 updates its cached copy of a location 0xA from 1 to 5. Then T2 on core C2 its cached copy of the same location updates to 10. It is important for core C3, when reading same memory location, to know that C2 was the last that updated the data so it can read the latest change, the value of 10.

How to ensure write serialization

There are basically two mechanisms for ensuring write serialization, snooping and directory-based protocols.

Snooping-based protocols – caches snoop (observe) each other’s read/write operations. Each cache broadcasts its read/write operation on a single common bus which connects all cores. All other caches react to that message appropriately. This way a cache is aware of all operations done by other caches and knows which cache has the latest changes made to a particular cache line. Also knows as snoopy protocols, they are simpler and generally faster, but not so scalable if there is a large number of processors in a system.

Directory-based protocols – a logically-central directory keeps track of where the copies of each cache line reside and caches consult this directory to ensure coherence. This directory coordinates all actions made by caches and that way preserving the order that is needed for a coherent state. Directory-based protocols are more scalable then snoopy protocols and are used in systems with a large number of CPUs.

How to ensure write propagation

Most common ways to ensure write propagation property are two snoopy-based methods, write-invalidate, and write-update.

Write-invalidate protocols – when a CPU core writes to some memory location, all other cores observe that write (by snooping the bus) and invalidate their cached copy for that location so every future read is read from main memory instead of the cache. These are the most commonly used protocols.

Write-update protocols – CPU core, when writing data to some memory location, broadcasts the write operation as well as the data that is going to be written. All other caches listening on the common bus will respond to that message by updating their cached copies with new data. Because the operation that is performed, as well as the data travels the bus, this broadcast requires more bandwidth to be used which is the reason why this method is less commonly used.

Summary

For a correct multithreaded parallel execution, it is important that every thread has to have a coherent view of shared memory. Cache coherence is one discipline that enables this requirement. For some detailed discussion about the topic, I highly recommend this and this lecture from Carnegie Mellon Computer Architecture course. Besides these two lectures, I recommend everyone to watch the whole course 🙂